We know it can be hard to separate fact from fiction when it comes to AI and data privacy. In talking with educators and district leaders, we often hear the same important questions. Here’s a look behind the scenes at how MagicSchool keeps your data safe.

MagicSchool follows strict privacy standards aligned with FERPA, COPPA, and SOC 2. We never sell, store, or train on personally identifiable information (PII). Built for education from the ground up, MagicSchool prioritizes safety, transparency, and compliance in every classroom.

Does MagicSchool allow LLM providers like OpenAI to store or train on student data?

No. MagicSchool does not allow any large language model provider, including OpenAI, to store or train on educator or student data.

When MagicSchool tools use an LLM, each request is processed securely. Our providers are contractually required to delete data immediately after processing and are prohibited from storing or using it to train their models.

We also guide teachers and students to interact safely with AI inside our app. Before they use MagicSchool, in-app prompts walk them through best practices such as checking outputs for bias and accuracy, protecting privacy, not uploading PII, and treating AI as a tool rather than a replacement for human thinking.

We maintain strict data classification to ensure that no personally identifiable information (PII) ever leaves the MagicSchool platform. Our privacy policy is fully aligned with FERPA, COPPA, and SOC 2 standards.

How does MagicSchool prove its AI data privacy claims?

We provide clear, detailed documentation for districts, administrators, and IT leaders explaining exactly how data is handled, including custom Data Privacy Agreements.

MagicSchool has earned the Common Sense Privacy Seal and undergoes regular third-party reviews and audits. Both Anthropic and OpenAI certify that MagicSchool operates with Zero Data Retention, and we maintain signed attestations verifying this.

Are other AI tools that use LLMs (Google, SchoolAI, Brisk, or Diffit) safer because they “don’t accept student data”?

Not necessarily. When an LLM-based tool says it “doesn’t accept student data,” that usually means it limits functionality, not that it is inherently safer.

MagicSchool is built to responsibly support teacher and student workflows with guardrails, moderation, and admin controls in place. Unlike other platforms, MagicSchool provides:

- System-level oversight for district leaders

- Central dashboards for monitoring usage

- Curriculum-aligned tools that improve instructional quality and consistency

Safety comes from responsible design and transparency.

What does “safe AI” actually mean at MagicSchool?

At MagicSchool, safety is our foundation.

- Data Safety: Student and teacher PII never leaves our system.

- Content Safety: Moderation filters prevent inappropriate or harmful outputs.

- System Safety: District admins control integrations, permissions, and visibility.

- Student Safety: Built-in human oversight ensures responsible use and accountability.

Educators and students deserve tools they can trust. Our white paper, The AI Safety Loop for Students, shares more about how we approach safety, transparency, and responsible AI use.

MagicSchool is the trusted AI platform built by educators, for education. We combine teacher-loved tools with enterprise-level security and compliance, giving districts the confidence to innovate safely.

To learn more about how MagicSchool protects educator and student data, read our full Privacy Policy or request our district documentation.

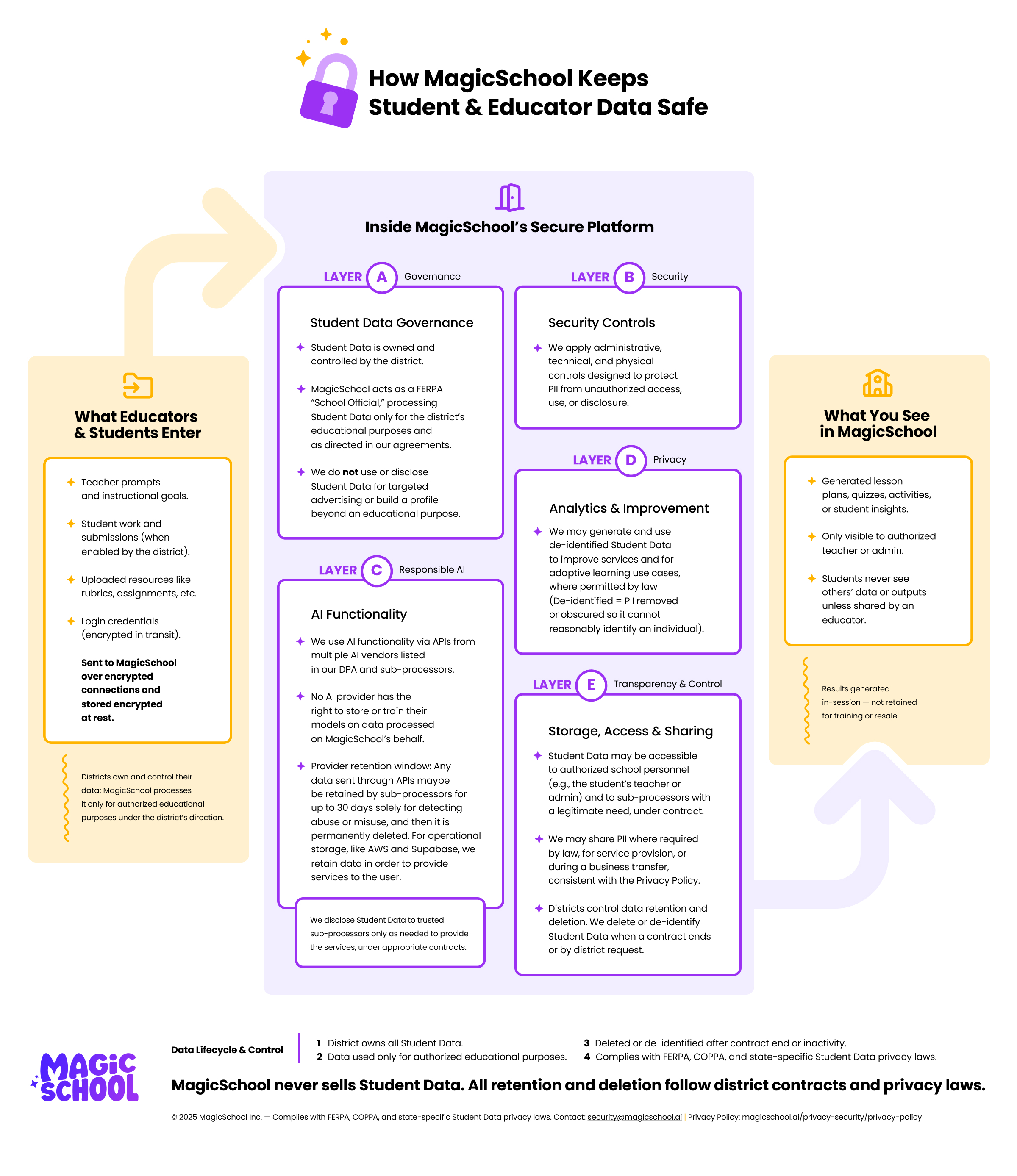

You can also download our Data Privacy Infographic.
